5 Best Apache Airflow Tutorials & Courses to Learn in 2025

Are you looking for the best Apache Airflow tutorials, courses, and training programs in 2025?

Apache Airflow is one of the most powerful open-source workflow orchestration tools for managing data pipelines. It allows developers and data engineers to define, schedule, and monitor workflows using Directed Acyclic Graphs (DAGs) written in Python.

Think of Airflow as the manager of your data tasks, ensuring each job runs at the right time, in the right order, with the right resources. Whether you're running ETL jobs or orchestrating machine learning pipelines, Airflow makes complex processes easier to control.

It's widely used in data engineering, automation, and ML workflows, and is often integrated with tools like Spark, Hadoop, and Kubernetes.

Keeping this in mind, here at Coursesity, we’ve curated some of the best online Apache Airflow tutorials and courses with certification. Whether you’re just starting or looking to deepen your Airflow skills, you’ll find a course below to help you author, schedule, and monitor complex data workflows.

Top Apache Airflow Tutorials, Courses & Certification (2025)

Here is a curated list of the best Apache Airflow tutorials and training programs available online. These courses will help you learn how to build, schedule, and monitor workflows using Apache Airflow — from beginner-friendly guides to production-level implementations.

1. The Complete Hands-On Introduction to Apache Airflow

Learn Apache Airflow from scratch with a practical, step-by-step approach to building DAGs and scheduling workflows.

2. Productionalizing Data Pipelines with Apache Airflow

Understand how to structure, scale, and maintain data pipelines in production using Airflow.

3. Apache Airflow on AWS EKS: The Hands-On Guide

Explore how to run Airflow on Kubernetes with AWS EKS for scalable and cloud-native orchestration.

4. Apache Airflow

A comprehensive look at core Airflow concepts, covering scheduling, triggering, sensors, and operators.

5. Apache Airflow: The Hands-On Guide

Build and run real-world DAGs, integrate Airflow with Python scripts, and monitor task execution like a pro.

Disclosure: We're supported by the learners and may earn from purchases through links.

1. The Complete Hands-On Introduction to Apache Airflow

Instructor: Marc Lamberti

- Course Rating: 4.4 out of 5.0

- Students Enrolled: 72,900+

- Duration: 3.5 Hours

- Certification: Yes

- Price: ₹3,099 (may vary with coupons)

- Best For: Data engineers and Python developers looking to master workflow orchestration using Apache Airflow from scratch.

Whether you're new to workflow orchestration or want to scale your pipeline automation skills, this is a comprehensive, hands-on Apache Airflow course for real-world data engineering tasks.

You'll start with the basics of Apache Airflow, understanding DAGs, tasks, and scheduling, and gradually move into more advanced topics like XComs, branching logic, plugins, Dockerized deployments, and integration with Big Data tools like Hive and Elasticsearch. The course is packed with exercises and real-world examples to help you apply each concept practically.

In this Apache Airflow course, you will learn:

- How to install, configure, and run Apache Airflow

- Creating and scheduling DAGs using Python

- Understanding Operators, Tasks, Triggers, and Workflows

- Using advanced concepts like XComs, branching, and SubDAGs

- Running Airflow with different Executors: Sequential, Local, Celery

- Using Docker to containerize Airflow projects

- Integrating Airflow with Big Data tools: Hive, PostgreSQL, Elasticsearch

- Building plugins to extend Airflow's core functionality

- Real-life scenarios for data pipeline orchestration and monitoring
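XComs and branching, two of the topics listed above, are easier to picture with a toy model. The sketch below is plain Python, not Airflow's API: `XComStore`, `run_pipeline`, and the task names are hypothetical. It shows one task storing a small value, a branch step reading it, and only the chosen downstream task running.

```python
from typing import Any, Dict, List, Tuple


class XComStore:
    """Toy stand-in for Airflow's XCom storage: values keyed by (task_id, key)."""

    def __init__(self) -> None:
        self._data: Dict[Tuple[str, str], Any] = {}

    def push(self, task_id: str, key: str, value: Any) -> None:
        self._data[(task_id, key)] = value

    def pull(self, task_id: str, key: str = "return_value") -> Any:
        return self._data.get((task_id, key))


def run_pipeline(rows: List[int]) -> List[str]:
    """Return the names of the tasks that ran, in order."""
    xcom = XComStore()
    ran: List[str] = []

    # Task 1: "count_rows" stores its result under the default "return_value" key
    xcom.push("count_rows", "return_value", len(rows))
    ran.append("count_rows")

    # Task 2: a branch step pulls that value and picks which task to follow
    count = xcom.pull("count_rows")
    next_task = "process_rows" if count > 0 else "skip_processing"
    ran.append("choose_branch")

    # Only the chosen downstream task runs; the other branch is skipped
    ran.append(next_task)
    return ran


print(run_pipeline([1, 2, 3]))  # ['count_rows', 'choose_branch', 'process_rows']
print(run_pipeline([]))         # ['count_rows', 'choose_branch', 'skip_processing']
```

In a real DAG, the equivalent pieces are `ti.xcom_push()` / `ti.xcom_pull()` (Airflow stores a task's return value under the `return_value` key) and a branching operator such as `BranchPythonOperator` or the `@task.branch` decorator, which returns the task ID to follow.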

Pros:

- Fully hands-on and project-driven

- Includes both basic and advanced Airflow concepts

- Great explanation of Executors and deployment modes

- Taught by a seasoned data engineer

Cons:

- Requires basic Python knowledge

- Some exercises may feel complex for complete beginners

2. Productionalizing Data Pipelines with Apache Airflow

Instructor: Axel Sirota

- Course Rating: 4.6 out of 5.0

- Level: Intermediate

- Duration: 2 Hours 13 Minutes

- Certification: Yes (with Pluralsight plan)

- Price: ₹1,499/month (Pluralsight subscription) — 10-day free trial available

- Best For: Data engineers looking to make Airflow pipelines production-ready with Kubernetes & Celery Executors.

This course teaches you to build production-grade data pipelines with Apache Airflow. Starting from pipeline design fundamentals, it focuses on making DAGs resilient, scalable, and ready for real-world deployment using the Kubernetes and Celery Executors.

In this Apache Airflow course, you will learn how to:

- Build and manage scalable Apache Airflow DAGs

- Abstract and modularize pipeline functionality

- Apply best practices for production-ready pipelines

- Handle DAG pitfalls and edge cases

- Use Celery and Kubernetes Executors in Airflow

Pros:

- Focused on real-world production scenarios

- Covers scale, reliability, and performance optimization

- Teaches debugging and deployment techniques

Cons:

- Intermediate level, not ideal for complete beginners

- Requires some prior understanding of data pipelines or Airflow basics

3. Apache Airflow on AWS EKS: The Hands-On Guide

Instructor: Marc Lamberti

- Course Rating: 4.4 out of 5.0

- Students Enrolled: 5,270+

- Duration: 7.5 Hours

- Certification: Yes

- Price: ₹3,399 (may vary with coupons)

- Best For: Experienced Airflow users who want to deploy production-grade architecture on AWS.

Struggling to run Apache Airflow on AWS? This course helps you deploy a production-ready Airflow architecture using AWS EKS with the Kubernetes Executor and Helm. Instead of basic DAG tutorials, you'll learn how to configure cloud-native infrastructure, manage sensitive data, and build scalable pipelines.

In this Apache Airflow course, you will learn how to:

- Deploy Airflow on AWS EKS using Helm

- Configure Kubernetes Executor for production use

- Automate deployments using GitOps

- Enable remote logging with AWS S3

- Use AWS CodePipeline for CI/CD DAG deployment

- Store DAGs using Git-Sync and AWS EFS

- Secure credentials using Secret Backends

- Create separate dev/staging/prod environments

- Build scalable and highly available architectures

Pros:

- Real-world production architecture

- Strong focus on automation & scalability

- Includes CI/CD and logging setup

Cons:

- Not beginner-friendly

- Not covered by the AWS free tier (uses EKS and several other paid AWS services)

4. Apache Airflow

Instructor: A to Z Mentors

- Course Rating: 4.4 out of 5.0

- Students Enrolled: 2,460+

- Duration: 6 Hours

- Certification: Yes

- Price: ₹2,859 (may vary with coupons)

- Best For: Beginners to intermediate learners who want a full walkthrough of Apache Airflow with real-time project use cases.

This course offers a complete A-to-Z guide to Apache Airflow, covering everything from DAGs to real-time pipeline deployment. It's ideal for those transitioning from traditional schedulers (like Oozie or cron) and looking to build confidence in production-grade workflows.

In this Apache Airflow course, you will learn how to:

- Author, schedule, and monitor workflows with Airflow

- Master core components: DAGs, Tasks, Executors, Operators

- Work with advanced features like XComs, SubDAGs, Sensors, and Hooks

- Understand exclusive topics: Data Profiling, Trigger Rules, .airflowignore, and zombie tasks

- Build and deploy a real-time pipeline with Docker

- Apply practical do's and don'ts for real-world Airflow use
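Trigger rules, one of the topics above, decide whether a task runs based on the final states of its upstream tasks. Below is a simplified plain-Python sketch, assuming every upstream task has already finished as success, failed, or skipped. The rule names match a subset of Airflow's built-in trigger rules, but the evaluation logic and the `should_run` helper are an illustration, not Airflow's implementation.

```python
from typing import Callable, Dict, List

# Simplified model: every upstream task has already finished as
# "success", "failed", or "skipped" (Airflow tracks more states than this).
TRIGGER_RULES: Dict[str, Callable[[List[str]], bool]] = {
    "all_success": lambda states: all(s == "success" for s in states),  # Airflow's default rule
    "all_failed": lambda states: all(s == "failed" for s in states),
    "all_done": lambda states: True,  # run whatever the upstream outcome was
    "one_success": lambda states: "success" in states,
    "one_failed": lambda states: "failed" in states,
    "none_failed": lambda states: "failed" not in states,  # successes and skips are fine
}


def should_run(trigger_rule: str, upstream_states: List[str]) -> bool:
    """Decide whether a downstream task runs, given its finished upstream tasks."""
    return TRIGGER_RULES[trigger_rule](upstream_states)


states = ["success", "failed", "skipped"]
for rule in TRIGGER_RULES:
    print(f"{rule:12} -> {should_run(rule, states)}")
```

In a DAG file the rule is set per task, for example `trigger_rule="all_done"` on a cleanup task that should run even when an upstream task fails.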

Pros:

- Beginner-friendly with hands-on demos

- Covers official and undocumented Airflow features

- Includes project files and quick query support

Cons:

- Basic Python required

- Slightly broad focus may overwhelm absolute beginners

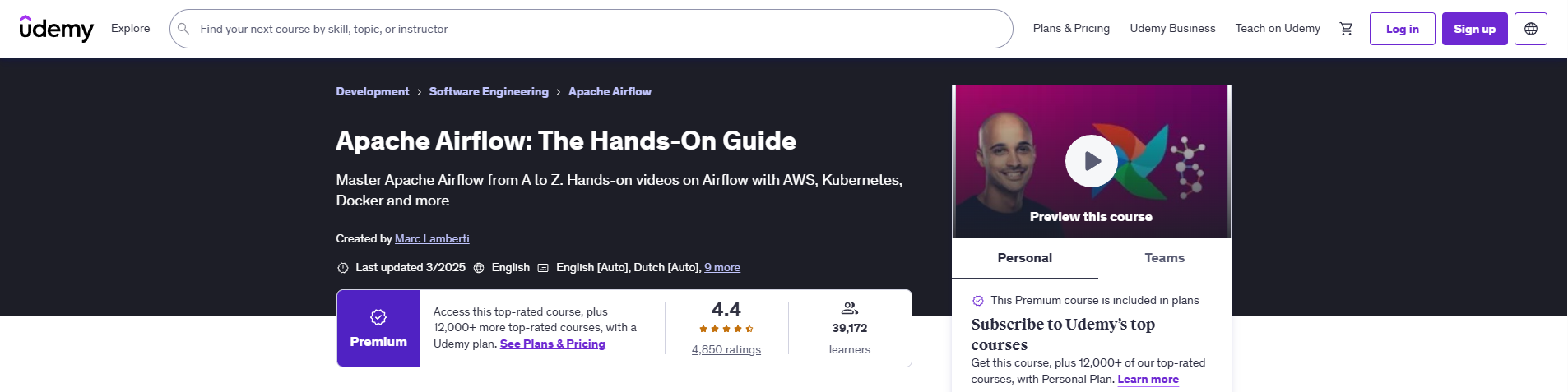

5. Apache Airflow: The Hands-On Guide

Instructor: Marc Lamberti

- Course Rating: 4.4 out of 5.0

- Students Enrolled: 39,170+

- Duration: 11 Hours

- Certification: Yes

- Price: ₹3,109 (may vary with coupons)

- Best For: Intermediate to advanced users ready to scale and secure production-grade workflows with Airflow and Kubernetes.

Learn how to scale, secure, and monitor Apache Airflow in production environments using Docker and Kubernetes. This course walks you through setting up both local and cloud-based Airflow clusters (AWS EKS), designing real-world pipelines, and implementing RBAC, observability, and best practices.

In this Apache Airflow course, you will learn:

- Core concepts like scheduler, webserver, and Airflow architecture

- DAG structuring, unit testing, templating, and dependencies

- Scaling Airflow using Local, Celery, and Kubernetes Executors

- Setting up Kubernetes clusters both locally (with Rancher) and in AWS (with EKS)

- Working with Elasticsearch and Grafana for Airflow monitoring

- Airflow security (RBAC, UI authentication, data encryption)

- Real-world Forex Data Pipeline project integrating Spark, Hadoop, and Slack

- Practical exercises, quizzes, and best practices throughout the course

Pros:

- Ideal for mastering the Kubernetes Executor setup in production

- Includes observability, security, and RBAC configuration

- Instructor support is fast and consistent

Cons:

- Not beginner-friendly; assumes Docker and Python familiarity

- Setup for Kubernetes clusters may feel overwhelming for some users

FAQs About Apache Airflow

1. What is Apache Airflow used for?

Apache Airflow is used to programmatically author, schedule, and monitor workflows. It helps automate data pipelines, making it ideal for ETL jobs, batch processing, machine learning, and other complex data workflows.

2. How does Apache Airflow work?

Airflow runs workflows defined as DAGs (Directed Acyclic Graphs) in Python files. The scheduler parses these files, decides when each task is due and whether its upstream tasks have finished, and hands ready tasks to an executor to run. The web UI lets you monitor execution status, logs, and task dependencies.
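To make "run in dependency order" concrete, here is a plain-Python sketch; it is not Airflow's actual scheduler. The `run_dag` helper and the task names are hypothetical, and it uses the standard library's `graphlib.TopologicalSorter` (Python 3.9+) so that every task runs only after all of its upstream tasks.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Set


def run_dag(tasks: Dict[str, Callable[[], None]],
            upstream: Dict[str, Set[str]]) -> List[str]:
    """Run each task after all of its upstream tasks; return the run order.

    `upstream` maps a task name to the set of task names it depends on.
    """
    sorter = TopologicalSorter(upstream)
    for name in tasks:
        sorter.add(name)  # include tasks that have no dependencies at all
    order: List[str] = []
    for name in sorter.static_order():
        tasks[name]()       # execute the task's callable
        order.append(name)  # record when it ran
    return order


# Hypothetical ETL pipeline: extract -> transform -> load
tasks = {
    "extract": lambda: print("extracting"),
    "transform": lambda: print("transforming"),
    "load": lambda: print("loading"),
}
upstream = {"transform": {"extract"}, "load": {"transform"}}

print(run_dag(tasks, upstream))  # ['extract', 'transform', 'load']
```

In an Airflow DAG file, the same dependencies are usually written with the bitshift operator, as in `extract >> transform >> load`.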

3. How is Apache Airflow different from other tools?

Unlike cron or manual scheduling, Airflow lets you define dependencies between tasks, configure retry logic, and run independent tasks in parallel, and it scales out through executors such as the Celery and Kubernetes executors.
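Parallel execution follows from the dependency graph: tasks with no path between them can run at the same time. The sketch below approximates that with Python's `graphlib` and a thread pool. `run_in_waves` and the task names are hypothetical, and a thread pool is only a rough stand-in for what an executor such as the LocalExecutor does.

```python
from concurrent.futures import ThreadPoolExecutor
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Set


def run_in_waves(tasks: Dict[str, Callable[[], None]],
                 upstream: Dict[str, Set[str]],
                 max_workers: int = 4) -> List[List[str]]:
    """Run tasks in dependency order, executing independent tasks concurrently.

    Simplification: each "wave" waits for all of its tasks to finish before
    the next one starts, whereas a real executor starts a task as soon as
    its own upstream tasks are done.
    """
    sorter = TopologicalSorter(upstream)
    for name in tasks:
        sorter.add(name)  # include tasks that have no dependencies
    sorter.prepare()
    waves: List[List[str]] = []
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        while sorter.is_active():
            ready = sorted(sorter.get_ready())           # tasks unblocked right now
            list(pool.map(lambda n: tasks[n](), ready))  # run them in parallel and wait
            sorter.done(*ready)                          # unblock their downstream tasks
            waves.append(ready)
    return waves


# Hypothetical pipeline: two independent extracts feed one transform, then a load
tasks = {name: (lambda: None) for name in ["extract_a", "extract_b", "transform", "load"]}
upstream = {"transform": {"extract_a", "extract_b"}, "load": {"transform"}}
print(run_in_waves(tasks, upstream))  # [['extract_a', 'extract_b'], ['transform'], ['load']]
```

A cron setup has no notion of this graph, so overlapping or out-of-order runs have to be prevented by hand.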

4. How do I install Apache Airflow?

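You can install Apache Airflow with Python's pip package manager, using a constraints file that pins tested dependency versions. Airflow publishes one constraints file per Airflow release and Python version, so replace 2.X.X with your Airflow version and 3.X with your Python version (for example, 3.11):

pip install "apache-airflow==2.X.X" --constraint "https://raw.githubusercontent.com/apache/airflow/constraints-2.X.X/constraints-3.X.txt"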
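Because the constraints file name depends on both the Airflow release and your Python version, it can help to build the URL programmatically. This sketch assumes the URL pattern from Airflow's installation docs (`constraints-<airflow version>/constraints-<python major.minor>.txt`); `constraint_url` is a hypothetical helper, and `2.10.5` is only an example version, so check the release notes for the one you need.

```python
import sys


def constraint_url(airflow_version: str, python_version: str = "") -> str:
    """Build the pip constraints URL for an Airflow release.

    Airflow publishes one constraints file per supported Python version,
    so the file name includes the "major.minor" Python version.
    """
    if not python_version:
        # Default to the interpreter running this script, e.g. "3.11"
        python_version = f"{sys.version_info.major}.{sys.version_info.minor}"
    return ("https://raw.githubusercontent.com/apache/airflow/"
            f"constraints-{airflow_version}/constraints-{python_version}.txt")


url = constraint_url("2.10.5", "3.11")
print(f'pip install "apache-airflow==2.10.5" --constraint "{url}"')
```

Defaulting to the running interpreter mirrors the usual advice to install into the same Python environment you will run Airflow from.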

5. Can Apache Airflow run on Windows?

Yes, but not natively. Apache Airflow runs on POSIX-compliant systems such as Linux and macOS; on Windows, you can run it through WSL2 (Windows Subsystem for Linux), Docker (Linux containers), or a Linux virtual machine. Linux-based systems are recommended for production.

6. Where can I learn Apache Airflow?

You can learn Apache Airflow through platforms like Udemy, Pluralsight, and Coursera, or directly from the official Airflow documentation. This blog lists beginner-to-advanced tutorials with certification.

7. Who uses Apache Airflow?

Apache Airflow is used by data engineers, machine learning engineers, and DevOps teams across companies like Airbnb, Lyft, Google, and many more for automating and scaling data workflows.

8. Why use Apache Airflow instead of cron jobs?

Cron jobs offer limited control. Airflow provides a full scheduling framework with monitoring, retries, parallel execution, failure alerts, task dependencies, and integrations with cloud/data tools.
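Retries and failure alerts are configured per task in Airflow, for example with the `retries`, `retry_delay`, and `on_failure_callback` task arguments. The sketch below is a plain-Python model of that behavior, not Airflow's code: `run_with_retries` and the example tasks are hypothetical, and `retry_delay` here is in seconds rather than a `timedelta`.

```python
import time
from typing import Any, Callable, List, Optional


def run_with_retries(task: Callable[[], Any],
                     retries: int = 2,
                     retry_delay: float = 0.0,
                     on_failure: Optional[Callable[[Exception], None]] = None) -> Any:
    """Run `task`, retrying up to `retries` extra times before giving up.

    `on_failure` is called once, with the last error, after the final
    attempt fails; the error is then re-raised.
    """
    for attempt in range(retries + 1):
        try:
            return task()
        except Exception as exc:
            if attempt == retries:   # no attempts left
                if on_failure is not None:
                    on_failure(exc)  # e.g. send a Slack or email alert
                raise
            time.sleep(retry_delay)  # wait before retrying


# A hypothetical task that fails twice, then succeeds
calls = {"n": 0}


def flaky_extract() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("source not ready")
    return "ok"


result = run_with_retries(flaky_extract, retries=2)
print(result, "after", calls["n"], "attempts")  # ok after 3 attempts

# A task that always fails triggers the alert callback
alerts: List[str] = []


def broken_load() -> None:
    raise RuntimeError("bad input")


try:
    run_with_retries(broken_load, retries=1, on_failure=lambda e: alerts.append(str(e)))
except RuntimeError:
    print("alerts sent:", alerts)  # alerts sent: ['bad input']
```

With cron, the equivalent retry loop and alerting usually end up hand-written inside each script.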

9. What is a DAG in Apache Airflow?

A DAG (Directed Acyclic Graph) is a collection of tasks together with the dependencies that define their execution order. Each DAG represents one workflow and is written in Python.
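"Acyclic" is the key constraint: if task A depends on B and B depends on A, there is no valid order in which to run them, and Airflow rejects such a DAG when it parses the file. This plain-Python sketch, using the standard library's `graphlib` (Python 3.9+), shows both a valid ordering and the cycle check; `execution_order` is a hypothetical helper.

```python
from graphlib import CycleError, TopologicalSorter
from typing import Dict, List, Set


def execution_order(upstream: Dict[str, Set[str]]) -> List[str]:
    """Return a valid run order, or raise ValueError if the graph has a cycle."""
    try:
        return list(TopologicalSorter(upstream).static_order())
    except CycleError as err:
        # err.args[1] holds the list of nodes that form the cycle
        raise ValueError(f"not a DAG, cycle found: {err.args[1]}") from err


print(execution_order({"transform": {"extract"}, "load": {"transform"}}))
# ['extract', 'transform', 'load']

try:
    execution_order({"a": {"b"}, "b": {"a"}})
except ValueError as e:
    print(e)
```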

10. Is Apache Airflow beginner-friendly?

Yes. While it requires basic Python knowledge, many beginner courses walk you through installation, writing your first DAG, and working with Airflow's UI and operators step by step.

We hope you found this list of Apache Airflow tutorials and training programs helpful.

Keep learning — Coursesity has you covered!